¹PICO IDL ByteDance, ²Beijing University of Posts and Telecommunications

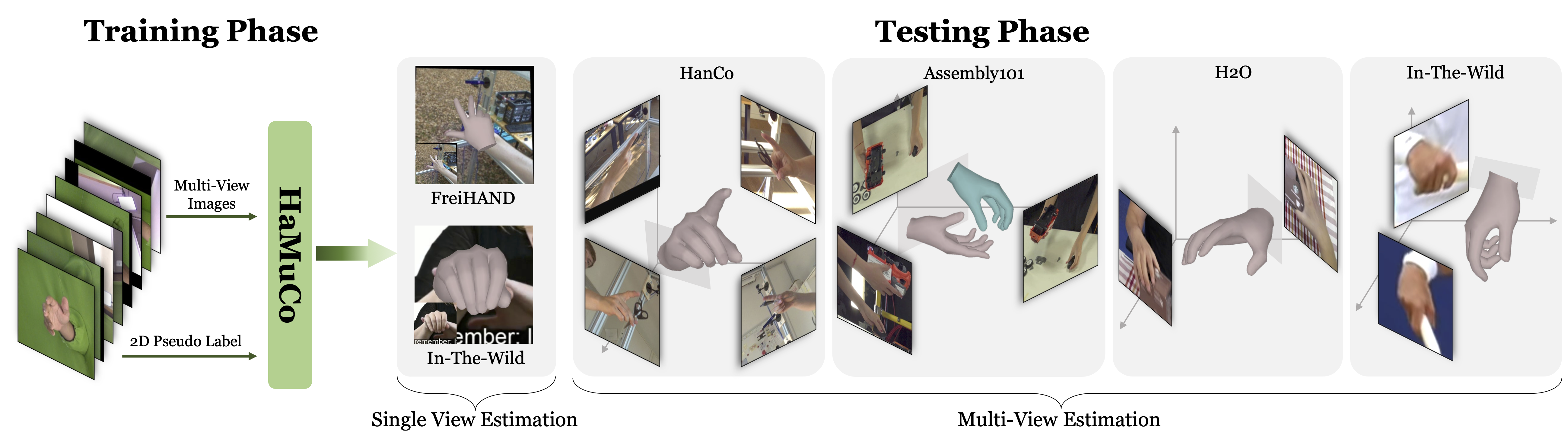

Our method takes multi-view images with 2D pseudo labels for training.

Our method can estimate accurate 3D hand pose from a single view or from an arbitrary number of views.

Abstract

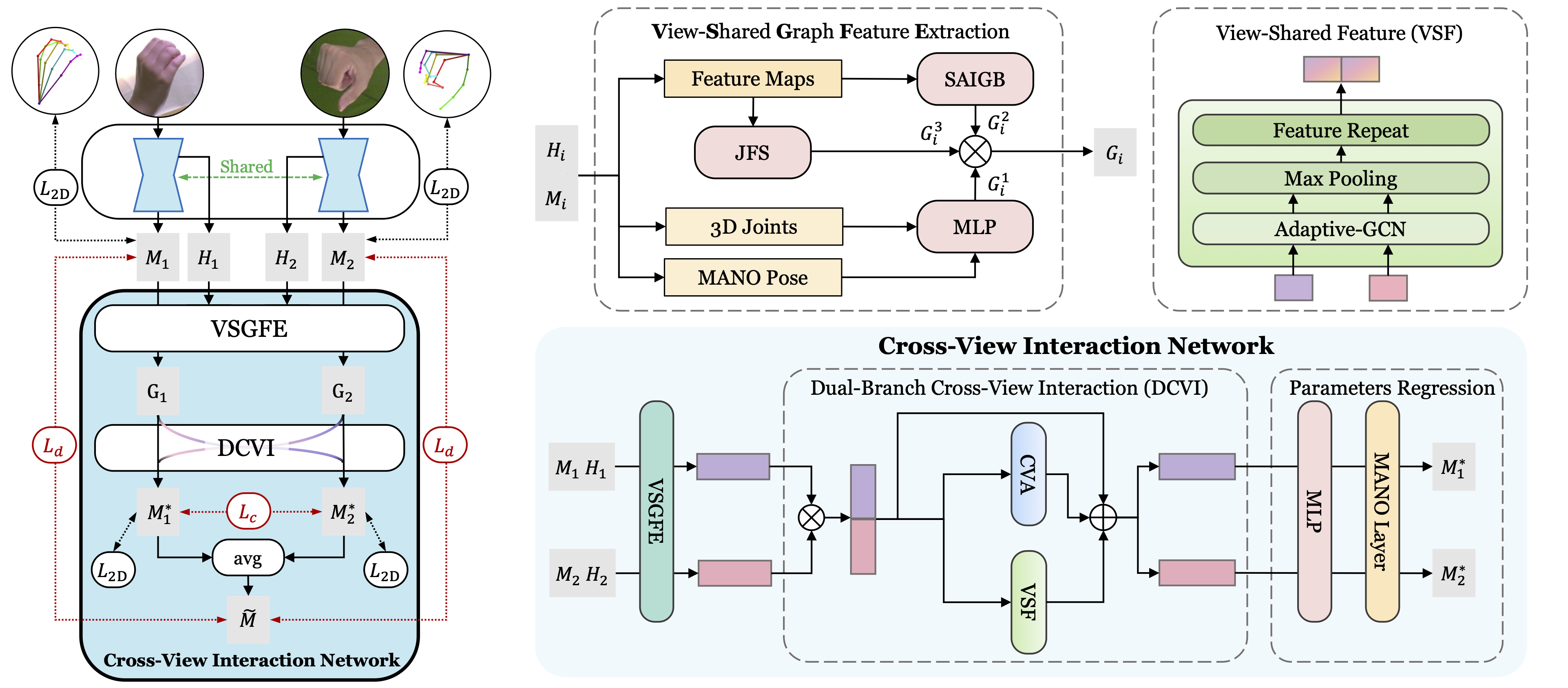

Recent advancements in 3D hand pose estimation have shown promising results, but their effectiveness has primarily relied on the availability of large-scale annotated datasets, the creation of which is a laborious and costly process. To alleviate this label-hungry limitation, we propose a self-supervised learning framework, HaMuCo, that learns a single-view hand pose estimator from multi-view pseudo 2D labels. However, one of the main challenges of self-supervised learning is the presence of noisy labels and the “groupthink” effect from multiple views. To overcome these issues, we introduce a cross-view interaction network that distills the single-view estimator by utilizing cross-view correlated features and enforcing multi-view consistency to achieve collaborative learning. Both the single-view estimator and the cross-view interaction network are trained jointly in an end-to-end manner. Extensive experiments show that our method achieves state-of-the-art performance on multi-view self-supervised hand pose estimation. Furthermore, the proposed cross-view interaction network can also be applied to hand pose estimation from multi-view input, where it outperforms previous methods under the same settings.
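To make the idea of multi-view consistency concrete, the following is a minimal sketch rather than the authors' implementation. It assumes that each view predicts root-relative 3D joints in its own camera frame and that camera-to-world rotations are known. The function name, the array layout, and the use of a plain cross-view mean as the consensus are all illustrative assumptions.

```python
import numpy as np


def multiview_consistency_loss(joints_cam, rotations):
    """Mean distance between each view's joints and the cross-view consensus.

    joints_cam: (V, J, 3) root-relative 3D joints predicted per view, expressed
                in that view's camera frame (hypothetical input layout).
    rotations:  (V, 3, 3) camera-to-world rotation for each view (assumed known).
    """
    # Rotate every view's prediction into the shared world frame: x_w = R @ x_c.
    joints_world = np.einsum("vij,vkj->vki", rotations, joints_cam)
    # Consensus pose: simple average over views (an assumption of this sketch).
    consensus = joints_world.mean(axis=0, keepdims=True)
    # Per-joint Euclidean distance to the consensus, averaged over views and joints.
    return float(np.linalg.norm(joints_world - consensus, axis=-1).mean())
```

The loss is zero when all views agree after alignment and grows as any view drifts from the others. A plain mean treats every view as equally reliable, so this sketch does not model the cross-view interaction network that the abstract proposes for handling noisy labels.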

Overview

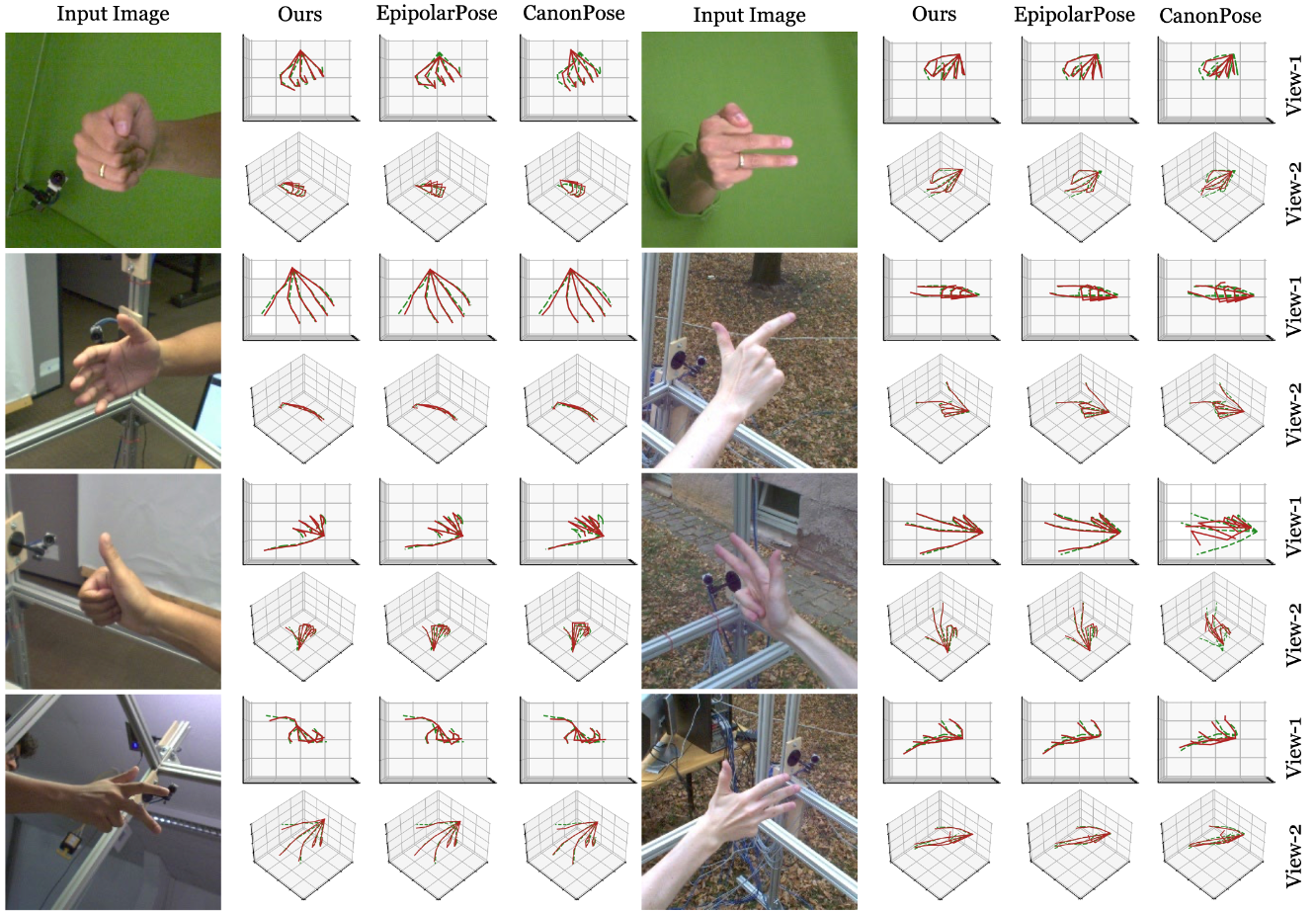

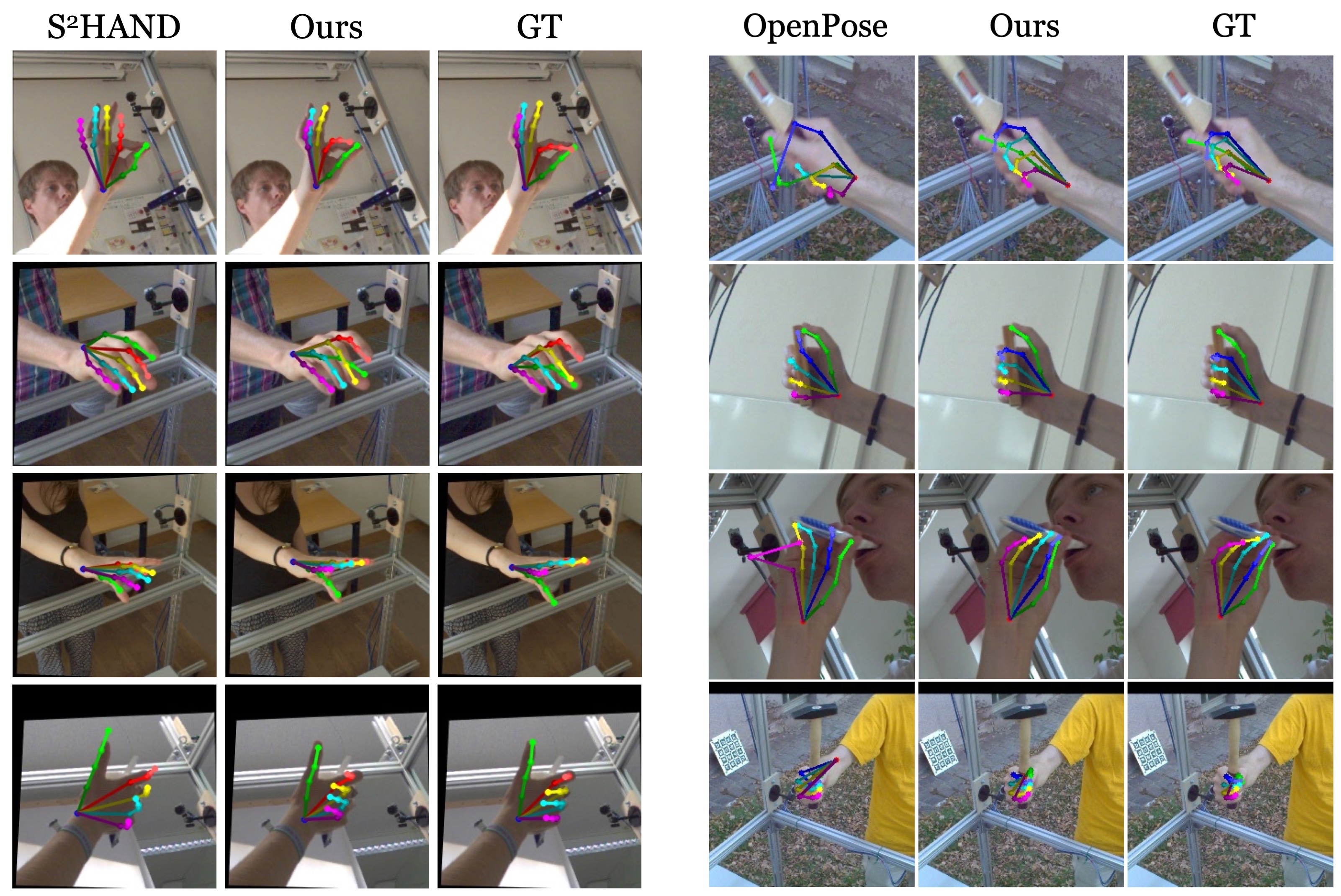

Qualitative result

BibTeX

@inproceedings{zheng2023hamuco,
  title={HaMuCo: Hand Pose Estimation via Multiview Collaborative Self-Supervised Learning},
  author={Zheng, Xiaozheng and Wen, Chao and Xue, Zhou and Ren, Pengfei and Wang, Jingyu},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year={2023}
}